Presenter: 廖志唯

Slides (PPT): https://drive.google.com/file/d/1Ei73pblt6MI_-je5OZjSEgJ-VjEl5zmb/view?usp=sharing

Title: THE AMERICA PROJECT

Author: Paul Vanouse

Source: http://www.paulvanouse.com/ap/

https://vimeo.com/372808350

https://www.youtube.com/watch?v=dSQQ67oCHXs

Abstract:

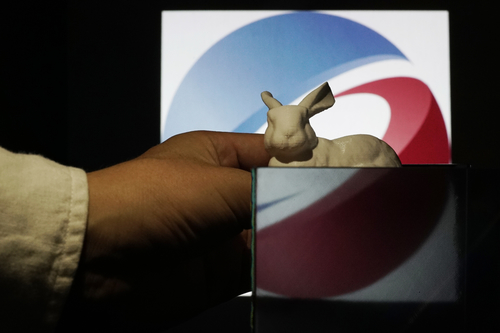

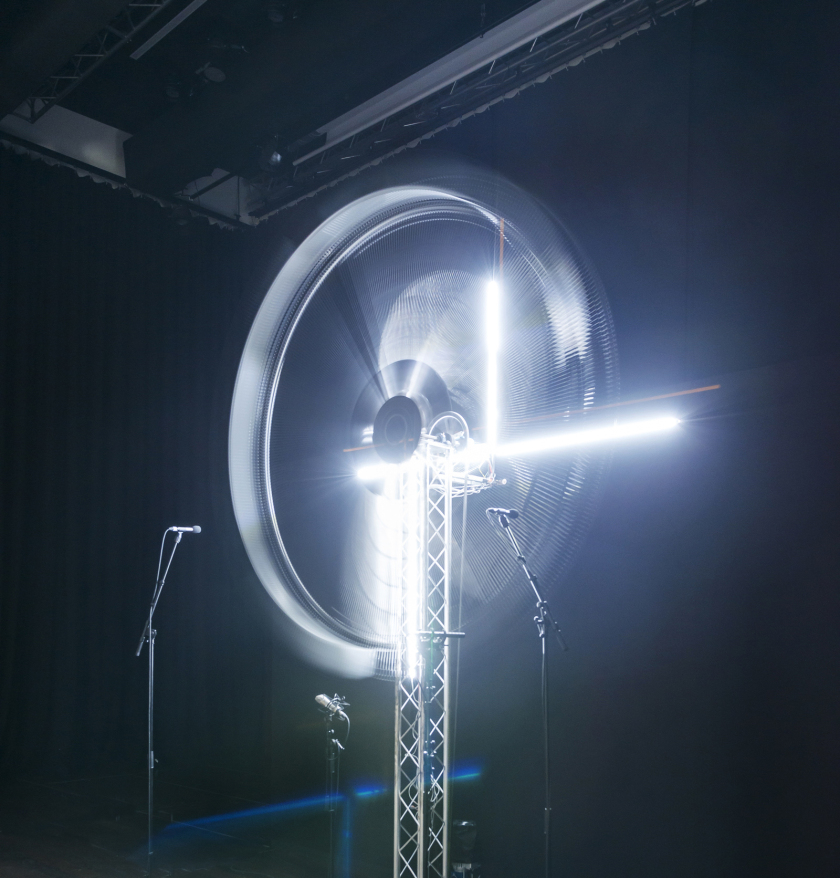

*The America Project* is a biological art installation centered on a process called “DNA gel electrophoresis,” AKA “DNA Fingerprinting,” which I’ve appropriated to produce recognizable images. Audiences first encounter what resembles a human-scale fountain or decanter, which is actually a spittoon to collect their spit. Viewers are offered a cup of saline, asked to swish it, and then asked to deposit it into the spittoon. During the opening, I extract the DNA from hundreds of spit samples, which contain cheek cells whose DNA is all mixed together. The DNA is neither individuated nor retained; it is processed as a composite to make iconic DNA Fingerprint images of power, which are visible as live video projections of the electrophoresis gels throughout the exhibition.

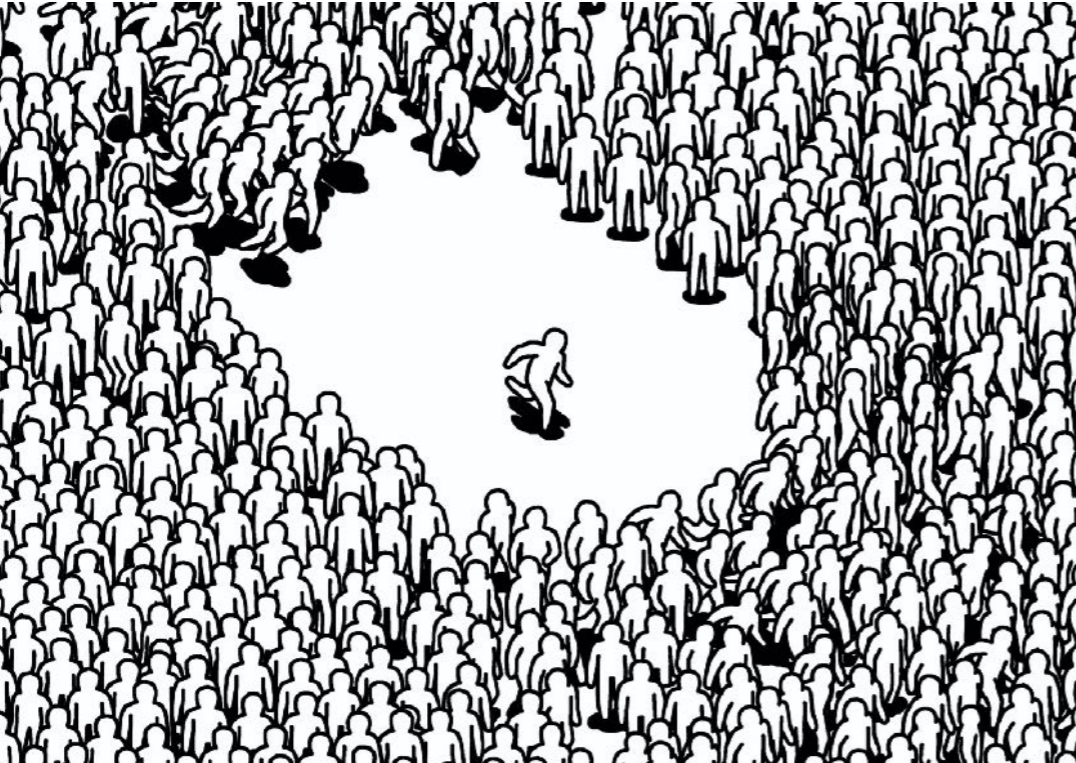

For decades, we’ve been told that DNA is our source of individuation, difference, and legal identity, whereas I’m showing that our DNA is nearly identical; I’m asserting a manifesto of Radical Sameness. The spittoon is a bio-matter anonymizer for decolonized citizen science. Everyone’s spit is mixed together, making individuation impossible. All collected samples are promiscuously commingled. What is visualized is our shared identity, our collectivity. The question is: from our shared biopower, are we merely the conduit for monarchial power, or will a power of the 99% emerge? The first images produced with the DNA are (1) a crown, symbolizing pure, top-down, rigid power; (2) an infinity sign, symbolizing endless potential, hope, and possibility; and lastly (3) the US flag. The flag’s meaning, elusive and underdetermined at the time, now seems perplexing and foreboding in the wake of the 2016 US election. The project premiered in Oct./Nov. 2016, a period in which the meaning of the subject/citizen and the meaning of America were being contested and perhaps subverted. The most poetic image was produced by accident during the opening, when the DNA gel tore: the disintegrating crown resembled a turbulent cloud, suggesting an unexpected escape from the experimental norm.

The title was chosen to desolidify the concept of America. The term *Project* implies a goal-oriented mission or experiment, and recalls the utopian plan for America as a melting pot in which the vast landscape would serve as an equalizer. In recent US elections, this utopian residue has catalyzed visions of social progress (Obama), but also neo-imperialist doctrines of exceptionalism (Bush) and extreme nationalism and xenophobia (Trump). Extreme nationalism is a growing global problem, and I expect the project to resonate in the European context. The DNA images will include additional icons of national power.

The flag image was created by doing bioinformatics in reverse: first determining the exact DNA fragment sizes needed to migrate at different rates through the gel and form the star field. We amplified segments from a region of DNA that nearly all humans share, so we could predict the amplified sizes using freely available online human genome sequences. Producing dense smears (the stripes) of exactly the right length was also challenging and required completely reimagining DNA processing. Solon Morse and I used the troubleshooting section of a DNA amplification handbook as a “how-to,” inverting best practice, since smears are exactly what that section teaches you to avoid. We believe that the radical appropriation of molecular and bioinformatic tools by the arts can inform the use, deployment, and public perception of technologies so vital to our understanding of proof, evidence, and identity.
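The "bioinformatics in reverse" described above involves two calculations: predicting the length of a PCR product from a public reference sequence, and working backward from a desired band position in the gel to the fragment length that would stop there. A minimal Python sketch of both follows, under stated assumptions: primers are matched exactly (real in silico PCR tools tolerate mismatches), and migration is modeled with the common approximation that, over a limited size range, distance travelled in an agarose gel falls roughly linearly with log10 of fragment length. All function names and ladder values are hypothetical; this is not the artists' actual workflow.

```python
import math

# Hypothetical helper for in silico PCR: complement table for DNA bases.
_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Return the reverse complement of an uppercase A/C/G/T sequence."""
    return seq.translate(_COMPLEMENT)[::-1]


def amplicon_length(template: str, fwd_primer: str, rev_primer: str):
    """Predict PCR product length from a reference (top-strand) sequence.

    Simplification (assumption): primers must match exactly. The forward
    primer appears on the top strand; the reverse primer binds the bottom
    strand, so its reverse complement appears downstream on the top strand.
    Returns None when either binding site is missing.
    """
    start = template.find(fwd_primer)
    if start == -1:
        return None
    rc = reverse_complement(rev_primer)
    site = template.find(rc, start + len(fwd_primer))
    if site == -1:
        return None
    return site + len(rc) - start


def fit_semilog(ladder):
    """Least-squares fit of distance = slope * log10(size_bp) + intercept.

    `ladder` is a list of (size_bp, distance_mm) pairs read off a size
    ladder run on the same gel (values supplied by the user).
    """
    xs = [math.log10(size) for size, _ in ladder]
    ys = [dist for _, dist in ladder]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def size_for_distance(distance_mm, slope, intercept):
    """Invert the fit: fragment length (bp) expected to stop at distance_mm."""
    return 10 ** ((distance_mm - intercept) / slope)


if __name__ == "__main__":
    # Hypothetical ladder and target rows; not measurements from the project.
    ladder = [(100, 60.0), (1000, 40.0), (10000, 20.0)]
    slope, intercept = fit_semilog(ladder)
    for row_mm in (30.0, 40.0, 50.0):
        bp = size_for_distance(row_mm, slope, intercept)
        print(f"band at {row_mm} mm -> about {round(bp)} bp")
```

In this sketch, each row of stars would be assigned a target distance, `size_for_distance` would suggest a fragment length for it, and `amplicon_length` would check whether a candidate primer pair yields that length on the reference sequence. Both steps are approximate, since the semi-log fit degrades toward the edges of a gel's resolving range.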