Report: Link

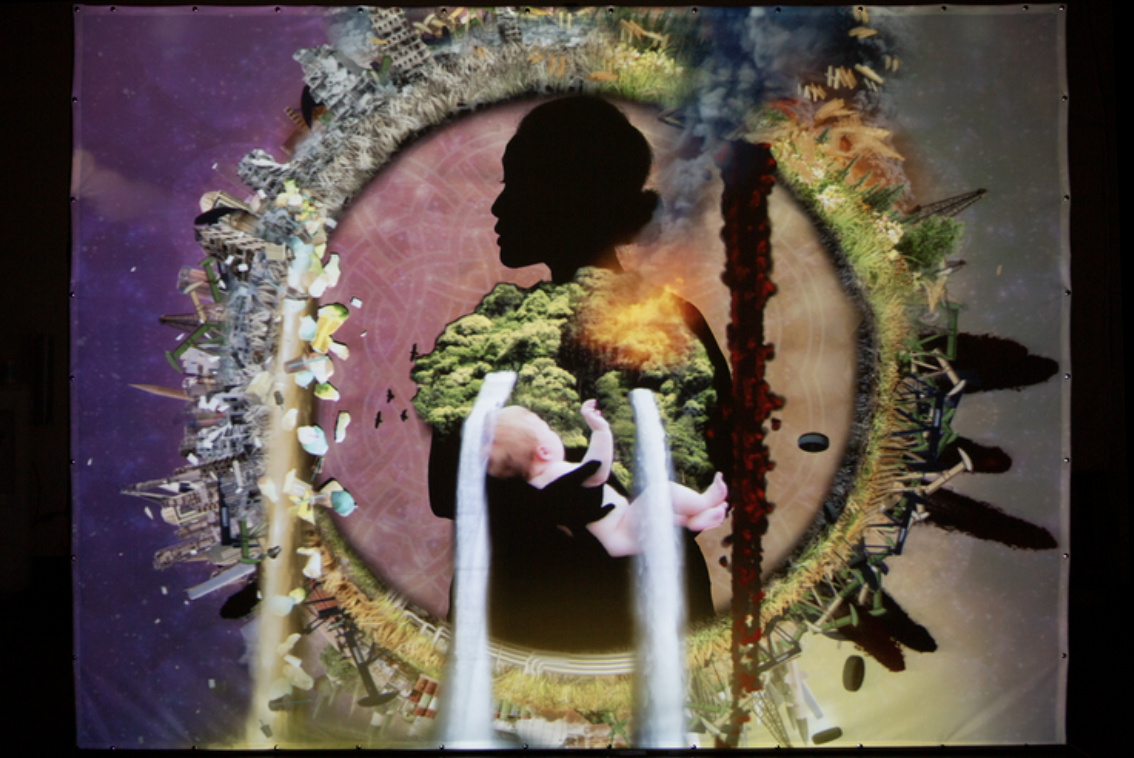

Topic: MACHINE AUGURIES

Author: Dr. Alexandra Daisy Ginsberg, Johanna Just, Ness Lafoy, Ana Maria Nicolaescu

Reference: ARS Electronica Festival 2020 (Interactive Art)

Abstract:

Before sunrise, a redstart begins his solo with a warbling call. Other birds respond, together creating the dawn chorus: a back-and-forth that, in spring and early summer, peaks in the thirty minutes before and after the sun emerges, as birds defend their territory and call for mates. Light and sound pollution from our 24-hour urban lifestyle affects birds, who are singing earlier, louder, for longer, or at a higher pitch. But only the species that adapt survive. Machine Auguries questions how the city might sound with changing, homogenizing, or diminishing bird populations.

In the multi-channel sound installation, a natural dawn chorus is taken over by artificial birds, whose calls are generated using machine learning. Solo recordings of chiffchaffs, great tits, redstarts, robins, and thrushes, along with recordings of entire dawn choruses, were used to train a Generative Adversarial Network (GAN): two neural networks pitted against each other to learn to sing. Reflecting on how birds develop their song by learning from each other, a call and response between real and artificial birds spatializes the evolution of a new language. Samples taken from each stage (epoch) of the GAN's training reveal the artificial birds' growing lifelikeness.

The composition follows the arc of a dawn chorus, compressed into ten minutes. The listener experiences the sound of a fictional urban parkland, entering in the dim silvery light of pre-dawn. We start with a solo from a lone “natural” redstart. In response, from across the room, we hear an artificial redstart sing back, sampled from an early epoch. A “natural” robin joins the chorus, with a call and response set up between natural and artificial birds. The chorus rises as other species enter, reaching a crescendo five minutes in. As the decline starts and the room illuminates to a warm yellow, we realize that the artificial birds, which have gained sophistication in their song, are dominating.

Introduction